What Is the NIST AI Risk Management Framework Profile?

An AI RMF profile is a component of the NIST AI Risk Management Framework (published as NIST AI 100-1). A profile applies the framework's functions, categories, and subcategories to a specific setting or application, based on an organization's requirements, risk tolerance, and resources. This lets organizations tailor their risk management approach to specific AI scenarios, so that their strategies are both robust and directly relevant.

What Are the 6 Steps of the NIST Risk Management Framework?

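The NIST Risk Management Framework (RMF), defined in NIST SP 800-37, unfolds in six steps that guide organizations through managing system risks, including the risks of AI systems. Revision 2 of SP 800-37 adds a preliminary Prepare step, and the AI RMF itself organizes its guidance around four functions: Govern, Map, Measure, and Manage.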

- Categorize – Define and classify the system along with the information it handles based on potential impacts.

- Select – Choose a baseline set of security and privacy controls that matches the system's impact level, then tailor it as needed.

- Implement – Put the selected controls in place and document how they are deployed.

- Assess – Evaluate whether the controls are implemented correctly, operate as intended, and meet the system's security and privacy requirements.

- Authorize – Make a risk-informed decision on whether the system should be allowed to operate.

- Monitor – Continuously watch the system and its controls, updating them as the system changes and threats evolve.
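To make the Categorize, Select, and Authorize steps more concrete, here is a minimal Python sketch. It assumes the FIPS 199 impact levels (low, moderate, high) and the FIPS 200 high-water-mark rule, under which a system's overall impact level is the highest level among confidentiality, integrity, and availability, and it maps that level to the name of the matching SP 800-53B control baseline. The `authorize` rule and the control results in the usage lines are hypothetical simplifications; in practice an authorizing official weighs residual risk rather than applying a fixed formula.

```python
from enum import IntEnum


class Impact(IntEnum):
    """FIPS 199 potential-impact levels; IntEnum ordering lets max() pick the worst case."""
    LOW = 1
    MODERATE = 2
    HIGH = 3


# The six steps in the order listed above; SP 800-37 Rev. 2 adds a "Prepare" step before them.
RMF_STEPS = ("Categorize", "Select", "Implement", "Assess", "Authorize", "Monitor")


def categorize(confidentiality: Impact, integrity: Impact, availability: Impact) -> Impact:
    """Categorize: overall level is the high-water mark of the three security objectives."""
    return max(confidentiality, integrity, availability)


def select_baseline(level: Impact) -> str:
    """Select: name the SP 800-53B baseline matching the impact level (before tailoring)."""
    return f"{level.name.lower()} baseline"


def authorize(assessment_results: dict[str, bool], accepted_risks: set[str]) -> bool:
    """Authorize (hypothetical rule): approve only if every control that failed
    assessment has been explicitly accepted as residual risk."""
    failed = {control for control, passed in assessment_results.items() if not passed}
    return failed <= accepted_risks


if __name__ == "__main__":
    level = categorize(Impact.LOW, Impact.MODERATE, Impact.LOW)
    print(level.name)              # MODERATE
    print(select_baseline(level))  # moderate baseline

    # Hypothetical assessment outcomes keyed by SP 800-53 control ID.
    results = {"AC-2": True, "SI-4": False}
    print(authorize(results, accepted_risks=set()))     # False: SI-4 failed and was not accepted
    print(authorize(results, accepted_risks={"SI-4"}))  # True: the failure was accepted as residual risk
```

The high-water mark is deliberately conservative: a single high-impact objective raises the whole system to the high baseline, even if the other two objectives are low.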

Following these steps helps organizations strengthen their defenses and helps ensure that AI systems are deployed responsibly and remain secure.


The Importance of Regular Updates

The field of AI is evolving rapidly, so the risks associated with AI systems, and the nature of those risks, can change unexpectedly. The NIST framework is designed to be dynamic, accommodating change through regular updates that reflect new findings, emerging threats, and technological advancements. Organizations should stay current with these updates so that their AI systems remain secure against new vulnerabilities.

Building a Culture of AI Safety

Implementing the NIST framework is not just about following a set of rules; it is about fostering a culture of safety and responsibility. Organizations should also prioritize continuous education and training so that their teams understand AI risk management practices and can implement them effectively. This investment in education builds a knowledgeable workforce that can anticipate and mitigate risks before they become critical issues.

Embracing the NIST AI Risk Management Framework is vital for any organization that wants to use AI responsibly. It provides a structured, nuanced approach to managing the risks associated with AI technologies.

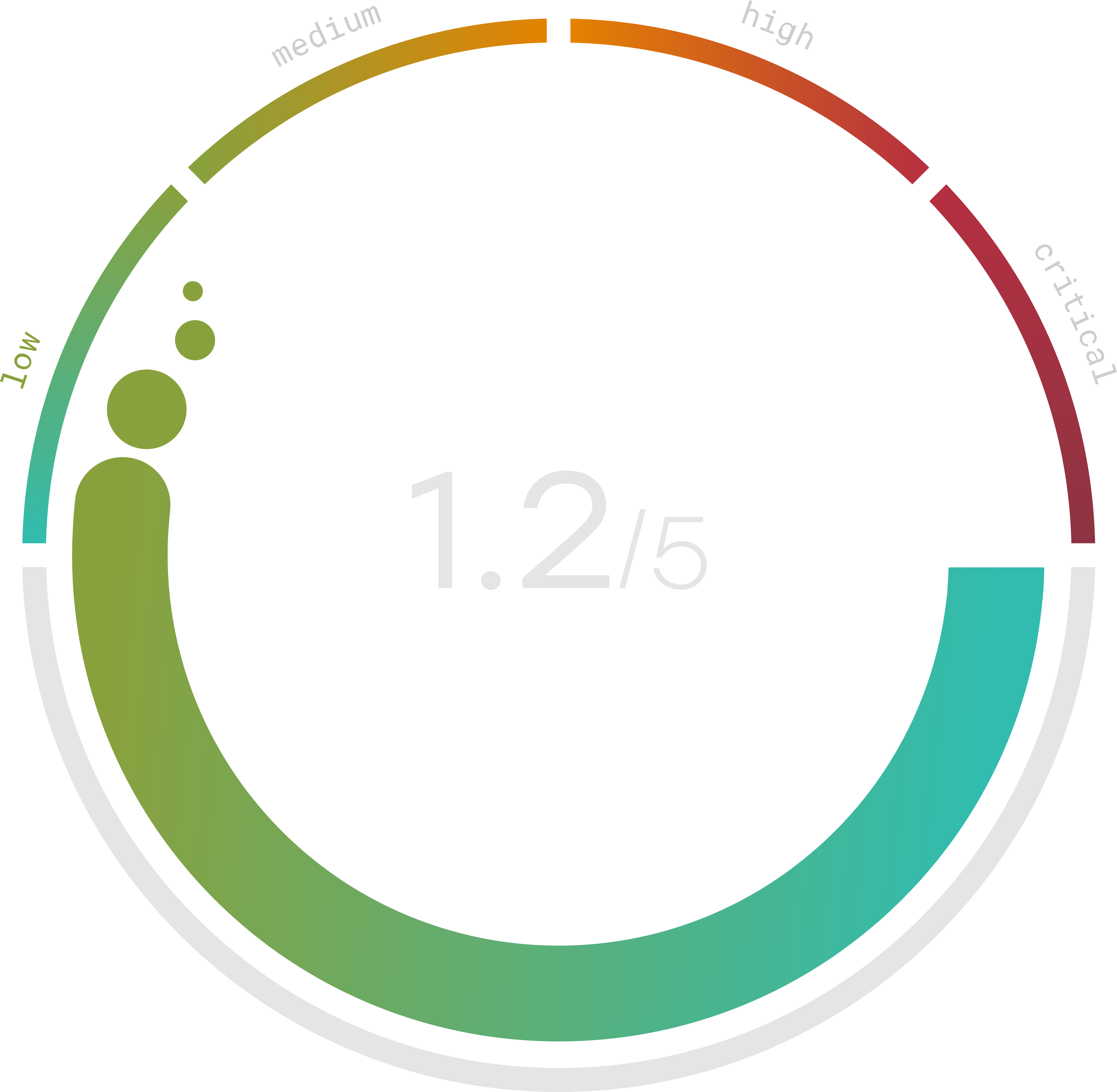
